Recently, I went to a bar in Tallinn with an acquaintance from the tech industry.

“I just interviewed at Revolut,” he said.

“How did it go?”

“Rejected after stage two - the product-sense round.”

That floored me. He’s a seasoned product leader with years of fintech experience under his belt.

“Why?”

“Feedback said I lacked product-strategy skills.”

That didn’t add up. I’ve seen him talk, write, and explain strategy better than managers in the top percentile.

Then he explained the process: the interviewer uploads the call transcript, and an AI scores the conversation for signals - like whether you probed the business rationale behind the case. If the transcript doesn’t hit specific keywords, the score dips.

At BirMarket, we’ve been using AI in interviews for months. However, I’ve always been careful not to turn it into a checkbox exercise.

More broadly, we’re in the middle of an inevitable transition.

HireVue (an AI recruitment company) surveyed 4,000 companies globally. The results show that AI adoption in hiring rose 25% year-over-year, with 72% of companies relying on AI for assessment purposes.

I’d advise taking this data with a grain of salt (an AI hiring company has a vested interest), but it is directionally correct.

At the same time, such a spike in AI-assisted hiring isn’t without its pitfalls.

AI can accelerate hiring; it can also flatten nuance if you let it. And hiring is the highest-ROI decision any org can make.

In this article, I’ll share:

practical ways to blend human judgment with AI scoring,

pitfalls to avoid when relying on transcripts,

and advanced tactics to scale your interviews - like real-time voice AI (with project links that you can start using immediately).

By the end, you’ll have a clear, repeatable framework for faster screens, stronger decisions, and a better candidate experience.

🧬 Today’s Article

Blending AI scoring with human judgment. Transcribing interviews. Assessing transcripts. Providing nuanced contextual awareness. Layering case questions.

🔒 Transcript pitfalls. Limited context. Language error rates. Shifting perspectives.

🔒 Advanced tools to scale your interviews. Multi-modal AI interviews. Use cases and limitations. Context provision. Spinning out a project with a downloadable file you can use immediately 🎁.

⚖️ Blending AI scoring with human judgment

Transcribing interviews

With the rise of AI interview platforms (Metaview, Manatal, Sloneek and others), it’s easy to think you need a complete workflow overhaul.

In reality, the most effective first step is much simpler: start using interview transcripts.

You can use Microsoft Teams’ built-in transcription, Zoom’s auto-transcription, or even Notion’s live transcription.

Download the transcript after each interview session. These texts become valuable data you can analyze with LLMs to identify key details, assess responses, and validate your own impressions.
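As a rough illustration of turning a downloaded transcript into something an LLM can work with, here is a minimal sketch. It assumes the export is a WebVTT file that marks speakers with `<v Name>` voice tags; check your own export, since formats differ between tools. The function name `parse_vtt_turns` is hypothetical and not part of any transcription product.

```python
import re

# Matches a WebVTT voice span such as "<v Jane Doe>Hello there</v>".
# Assumption: one voice tag per cue line; the closing tag is optional.
VOICE_TAG = re.compile(r"<v ([^>]+)>(.*?)(?:</v>|$)")


def parse_vtt_turns(vtt_text):
    """Return a list of (speaker, text) turns, merging consecutive
    cues from the same speaker into a single turn."""
    turns = []
    for raw_line in vtt_text.splitlines():
        match = VOICE_TAG.search(raw_line.strip())
        if match is None:
            # Header, timing line, or blank line: nothing to extract.
            continue
        speaker = match.group(1).strip()
        utterance = match.group(2).strip()
        if turns and turns[-1][0] == speaker:
            # Same speaker kept talking: extend the previous turn.
            turns[-1] = (speaker, turns[-1][1] + " " + utterance)
        else:
            turns.append((speaker, utterance))
    return turns


def turns_to_text(turns):
    """Flatten turns into 'Speaker: text' lines for pasting into a prompt."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)
```

Merging consecutive cues keeps each answer in one piece, which makes it easier to quote evidence back from the transcript later.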

At our company, we use transcripts from Microsoft Teams and view AI as an evaluation partner. This partnership, however, comes with certain ethical responsibilities.

Two Critical Rules for Ethical Use

Transparency is Mandatory: Always tell candidates that the interview will be transcribed for evaluation purposes, and obtain their consent.

Strict Access Control: Transcripts must never be shared widely within the company. We limit access solely to the interview panel to reduce the risk of confirmation bias.

Assessing transcripts

The real challenge of using transcripts is avoiding subjective assessment.

Without a framework, it’s easy to find evidence in the text to support any pre-existing bias.

To ensure consistent and fair evaluation, your entire interview panel must agree on a unified set of qualification criteria.

Think of this as the critical context you must provide to an AI model.

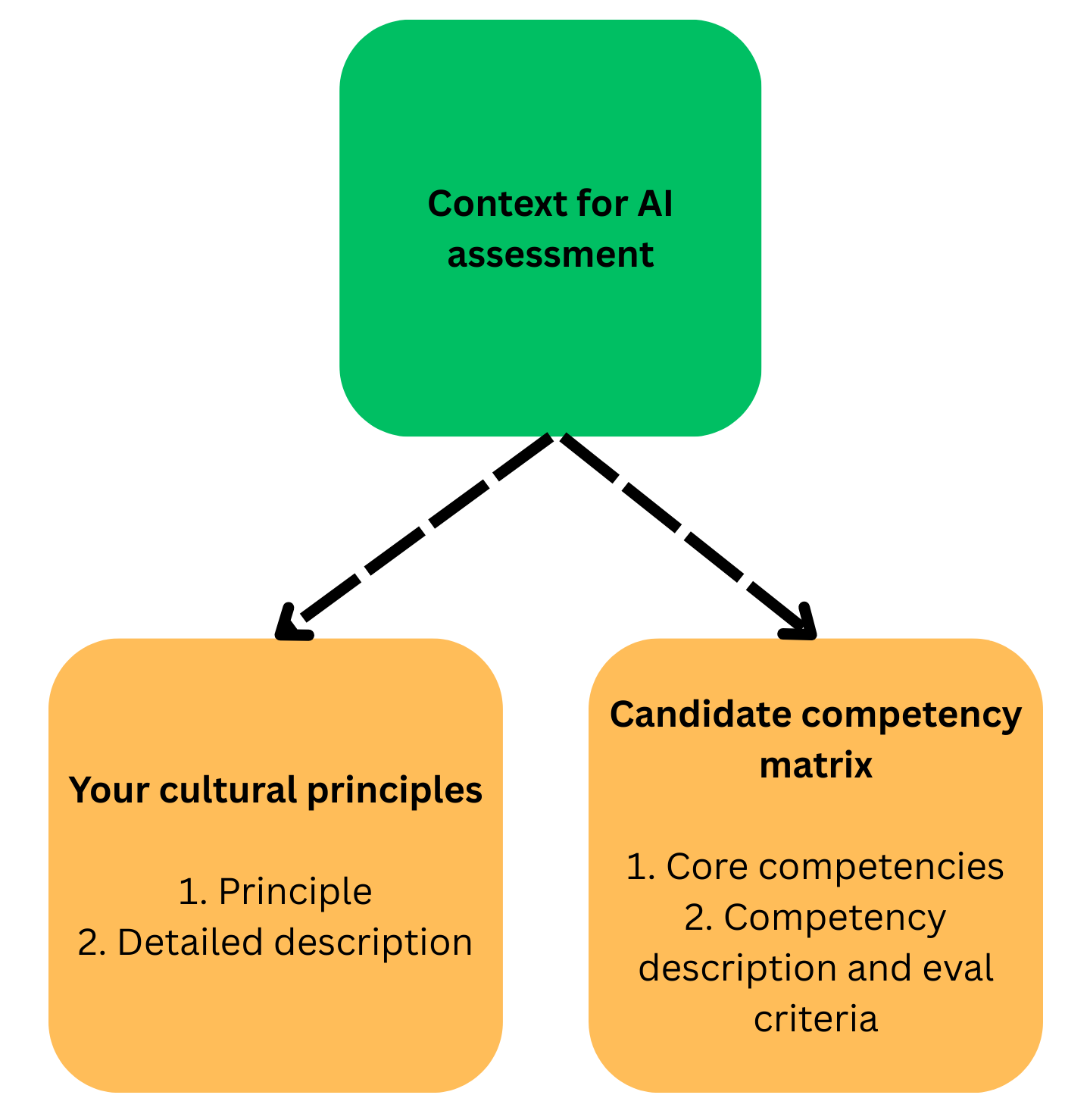

Context consists of your cultural principles and candidate competency matrix - all with detailed descriptions.

Without extensive and precise descriptions, both humans and AI models are forced to guess.

To eliminate this guesswork, codify your standards in a central document (e.g., a Word file or PDF) that serves as a single source of truth for evaluation.

Here’s an example of the input I use for one of my favorite principles at BirMarket:

4. You Don’t Rise to the Level of Your Goals, You Fall to the Level of Your Systems

Detailed Description:

Goals define where we want to go, but systems determine how we get there, and whether we arrive at all. A brilliant goal with a broken system leads to frustration and failure. A mediocre goal with an excellent system leads to predictable, repeatable success. This principle forces us to focus on our “how”. It’s about building and relentlessly refining our systems: our engineering pipelines, our design review processes, our communication rhythms, our hiring practices, and our customer support protocols. When we perfect the machinery of our work, outstanding outcomes become the natural byproduct. We invest our energy in building the best possible systems, trusting that they will effortlessly outperform the best possible goals.

Based on those inputs, ask your LLM to assess the transcript against your internal scoring scale.

Aside from the context, you should also assign your assessor a role (e.g., VP of Product) and keep it consistent across the hiring panel.
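To make the pieces above concrete (an assessor role, described criteria, and a transcript), here is a minimal sketch of how they might be assembled into one prompt. Everything here is illustrative: the `Criterion` class, the `build_assessment_prompt` function, and the 1 to 5 scale are assumptions, not BirMarket's actual setup. The sketch only builds the prompt text; sending it to a model is left to whichever LLM tool you use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One principle or competency, with its detailed description."""
    name: str
    description: str


def build_assessment_prompt(role, criteria, transcript, scale_min=1, scale_max=5):
    """Combine role, criteria, and transcript into a single prompt string."""
    if not criteria:
        raise ValueError("at least one criterion is required")
    for criterion in criteria:
        # A criterion without a description forces the model to guess.
        if not criterion.description.strip():
            raise ValueError(f"criterion {criterion.name!r} needs a detailed description")

    criteria_block = "\n\n".join(
        f"### {c.name}\n{c.description.strip()}" for c in criteria
    )
    return (
        f"You are acting as {role} on a hiring panel.\n\n"
        "Evaluation criteria (the single source of truth):\n\n"
        f"{criteria_block}\n\n"
        f"For each criterion, give a score from {scale_min} to {scale_max} "
        "and quote the transcript passages that support it. "
        "If the transcript has no evidence for a criterion, say so instead of guessing.\n\n"
        f"Interview transcript:\n{transcript.strip()}\n"
    )


if __name__ == "__main__":
    systems = Criterion(
        name="You Fall to the Level of Your Systems",
        description="Goals define where we want to go, but systems determine how we get there.",
    )
    print(build_assessment_prompt("VP of Product", [systems], "Candidate: ..."))
```

Keeping the role and criteria in one function means every panel member sends the model the same context, so differences in scores come from the transcript, not from how each person phrased the request.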

Contextual awareness

In a recent study, researchers chained multiple AI agents together and powered the whole thing with retrieval-augmented generation (RAG). They stored all the CVs and related data in a database.