Replacing user research with AI

A manual on how to create a roughly 85% accurate synthetic AI user panel for UX studies and surveys.

UX legend Jakob Nielsen stated in his 2024 essay: “… will AI be able to replace user research? No, then we get into that realm of the impossible.”

That’s a bold statement, which made me curious.

He’s a godfather of user research, so he is probably right and sees more nuance than I do.

However, looking at it from another angle, it might just as well be a conservative generalization.

A call to stick to the tried and tested way things have always been done.

Reflecting on this, I asked myself a crazy question: what would happen if I replaced an entire user panel with AI?

The models have made a massive leap since 2024. Research has advanced a lot. Why not try it out? Is it really a “realm of the impossible”?

I dove deep into the research field of LLM-based human simulation (sims). My goal was to try and build a practical low-code engine that can augment product discovery. To my surprise, it turned out to be possible.

There are caveats: it won’t fully replace user research and won’t give you razor-sharp 100% accuracy.

But it definitely works for preliminary testing of new ideas and discovery.

Here’s a deeply researched, insanely tactical guide (with an n8n JSON template included) that will allow you to build, execute and endlessly scale user research panels, directly from your laptop and at a fraction of the cost of a typical UX study.

🧬 Article Structure

Simulating humans. Human vs. an LLM, chain of thought vs. chain of feelings, behavioral topologies, fine tuning vs. prompting.

🔒 Building your own simulation. Full architecture, persona setup, evals, adding a memory layer, adding an evaluator + a downloadable n8n JSON that you can use immediately.

🤖 Simulating humans

Human vs. an LLM

First, LLMs think very differently from humans.

The vast corpus of data that most foundation models were trained on isn’t representative of how people behave. Put simply, Reddit threads only show a single side of human nature.

This can trigger some troubling racial or nationalist biases that are inherent in most viral discussions.

On average, the LLMs tend to sound more like rich, young and liberal Western people, because that’s what much of their training data reflects.

Second, LLMs are very rational. When given a complex mathematical question, you’ll get far less diverse answers from an LLM than from a human.

In one classic game, each player picks a number from 11 to 20. You score points equal to your chosen number, plus a 20-point bonus if your number is exactly one less than your opponent’s.

LLMs almost always choose 19 or 20, producing very uniform answers. Humans, by contrast, select a much wider range of numbers, with an average choice of 17.
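The scoring rule of this game is easy to write down. Here is a minimal Python sketch of it, assuming the rules exactly as described above; the names `payoff`, `best_response` and `undercut_chain` are made up for illustration. It shows why 19 and 20 look like the "rational" picks, and how a few rounds of "undercut the other player" thinking walk the choice further down.

```python
LOW, HIGH, BONUS = 11, 20, 20  # rules as described in the text


def payoff(mine: int, theirs: int) -> int:
    """Points for one player: the chosen number, plus a bonus for undercutting by exactly one."""
    for choice in (mine, theirs):
        if not LOW <= choice <= HIGH:
            raise ValueError(f"choices must be between {LOW} and {HIGH}, got {choice}")
    return mine + (BONUS if mine == theirs - 1 else 0)


def best_response(theirs: int) -> int:
    """The choice that maximizes my payoff if I know what the opponent picks."""
    return max(range(LOW, HIGH + 1), key=lambda mine: payoff(mine, theirs))


def undercut_chain(start: int = HIGH, steps: int = 3) -> list[int]:
    """Repeatedly best-respond to the previous choice (illustrative helper)."""
    chain = [start]
    for _ in range(steps):
        chain.append(best_response(chain[-1]))
    return chain


print(payoff(20, 20))    # 20: the safe choice, no bonus
print(payoff(19, 20))    # 39: undercutting a 20 by exactly one
print(undercut_chain())  # [20, 19, 18, 17]
```

Picking 20 is the safe option, and 19 is the best reply to someone playing it safe. Each extra step of second-guessing lowers the number by one.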

We humans sometimes make impulse decisions, driven by our emotions. LLMs don’t care how many tokens your query will consume; the budget is the only limit. We humans are lazy and optimize for the least effort whenever possible.

Another issue with LLMs is so-called sycophancy: the tendency of the model to please the user in order to earn positive feedback.

Such a side effect is part of the initial model design and temperature settings. It’s OK when you embed the LLM into a consumer-facing chat.

But you don’t want that when building a user simulation; it’s a recipe for bias.

Chain of thought vs. chain of feelings

The chain-of-thought concept has proven to be extremely effective at solving logical problems and critical reasoning tasks.

Chess masters and mathematicians use chain-of-thought reasoning quite often.

But most regular users are not chess masters.

When they are invited to a panel or a UX test, they come to talk and express their opinions (sometimes irrational and conflicting).

What guides them isn’t a chain of thought but rather a chain of feelings.

Take a simple survey question: “Do you track the calories of what you eat?”

First response:

Curiosity (intensity - 5 out of 10) according to my persona profile and OCEAN model. When was the last time I tracked calories? Probably never.

Second response:

Frustration (7 out of 10). Oh, I overate yesterday. That second side of fries was too much. I have no idea how many calories that is.

Third response:

Boredom (9 out of 10). Tracking calories, writing them down, it’s such a boring exercise. I have no idea how to do it and where to even find the information on calories.

Fourth response:

Frustration (9 out of 10). How would I know how many calories there are in the fries I ate yesterday?! Should I google it or something?

Each thought provokes a corresponding feeling, and its intensity depends on the persona profile (both socio-demographic and behavioral).

The feelings shift thoughts in very different directions compared to a chain of thought.
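One way to make a chain of feelings concrete is to treat each step as a small record: an emotion, an intensity and the thought that triggered it. The Python sketch below is only an illustration of that idea, not the engine described later in the article; `FeelingStep`, `render_chain` and `dominant_feeling` are hypothetical names, and the sample steps are shortened from the example above.

```python
from dataclasses import dataclass


@dataclass
class FeelingStep:
    emotion: str
    intensity: int  # 1 to 10, as in the example above
    thought: str

    def __post_init__(self) -> None:
        if not 1 <= self.intensity <= 10:
            raise ValueError(f"intensity must be 1-10, got {self.intensity}")


def render_chain(question: str, steps: list[FeelingStep]) -> str:
    """Format the chain as numbered lines, e.g. to paste into a prompt as a few-shot example."""
    lines = [f"Question: {question}"]
    for number, step in enumerate(steps, start=1):
        lines.append(f"{number}. {step.emotion} ({step.intensity} out of 10): {step.thought}")
    return "\n".join(lines)


def dominant_feeling(steps: list[FeelingStep]) -> FeelingStep:
    """The most intense step; on a tie, the earliest one wins."""
    return max(steps, key=lambda step: step.intensity)


question = "Do you track calories of what you eat?"
steps = [
    FeelingStep("Curiosity", 5, "When was the last time I tracked calories? Probably never."),
    FeelingStep("Frustration", 7, "That second side of fries was too much."),
    FeelingStep("Boredom", 9, "Writing calories down is such a boring exercise."),
    FeelingStep("Frustration", 9, "Should I google it or something?"),
]

print(render_chain(question, steps))
print(dominant_feeling(steps).emotion)  # Boredom
```

Keeping the intensity as a number makes it easy to check later whether a simulated persona's answers actually follow its strongest feeling.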

Behavioral topologies

There are many ways to classify human behavioral archetypes: DISC, MBTI, etc.

The approach that was tested with LLMs is called OCEAN.

It stands for:

Openness (curiosity about new ideas),

Conscientiousness (level of self-organization),

Extraversion (pretty obvious),

Agreeableness (cooperation) and

Neuroticism (proneness to anxiety and stress).

Each one is measured on a numeric scale from 1 to 10.

A combination of those factors creates a unique persona profile.

Some studies suggest that prompting LLMs with very specific numeric OCEAN parameters can tilt the model towards more human-like responses (close to 85% overlap with human responses).
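To show what "prompting with numeric OCEAN parameters" could look like in practice, here is a minimal Python sketch. It assumes the 1 to 10 scale described above. The `OceanProfile` class, the `persona_prompt` function, the prompt wording and the sample persona are all illustrative assumptions, not the wording used in the studies.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OceanProfile:
    openness: int
    conscientiousness: int
    extraversion: int
    agreeableness: int
    neuroticism: int

    def __post_init__(self) -> None:
        # Every trait must sit on the 1-10 scale used in this article.
        for trait in fields(self):
            value = getattr(self, trait.name)
            if not 1 <= value <= 10:
                raise ValueError(f"{trait.name} must be 1-10, got {value}")


def persona_prompt(name: str, background: str, profile: OceanProfile) -> str:
    """Build a system prompt that states the persona and its numeric OCEAN scores."""
    traits = ", ".join(
        f"{trait.name.capitalize()} {getattr(profile, trait.name)}/10"
        for trait in fields(profile)
    )
    return (
        f"You are {name}, {background}. "
        f"Your OCEAN personality scores are: {traits}. "
        "Answer every question in the first person, stay in character, "
        "and let these scores shape your tone and choices."
    )


# Hypothetical persona, for illustration only.
profile = OceanProfile(openness=3, conscientiousness=4, extraversion=7,
                       agreeableness=5, neuroticism=8)
print(persona_prompt("Dana", "a 34-year-old nurse who eats out most days", profile))
```

The resulting string can be used as the system message for any chat model. Changing a single score gives you a different panel member without rewriting the prompt.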

Fine tuning vs. prompting

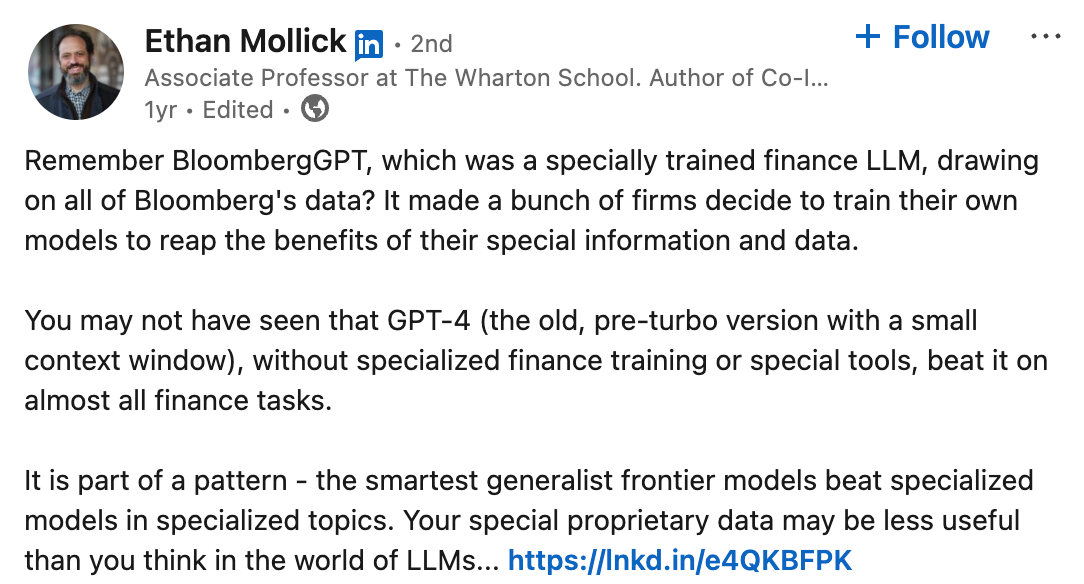

Ethan Mollick (a Wharton professor, knee-deep in AI), when referring to the infamous BloombergGPT (now defunct), mentioned that stronger generalist LLMs outperform fine-tuned specialist models built on inferior base LLMs.

Put simply, a GPT-3.5 model, even when fine-tuned on a massive corpus of legal data, will underperform compared to a generalist GPT-4 base model.

It’s still possible to fine-tune a stronger base model and get better results. Studies show that such models deliver 10–20% higher task accuracy and consistency than their generalist counterparts.

There are examples of this in human answer simulation. A team of researchers from Princeton, Google DeepMind, and NYU released a model called “Centaur”.

They took the LLaMA-70B base model and fine-tuned it on survey and trial data from 60,000 respondents across 160 different experiments.

The corresponding study showed that the fine-tuned model was much better at predicting human choices than the untuned base LLaMA model.

The flipside is cost: it took 80 GPUs and five days of non-stop training, costing tens of thousands of $$$.

Alternatively, you can deploy the model yourself (it’s available on Hugging Face), but you’ll need to rent a solid GPU cluster to run inference. This is also costly, though measured in the hundreds of $$$.

My take is that you can sacrifice a marginal percentage of accuracy for speed, cost, and convenience with proper prompting. Alternatively, you can supplement prompts with memory so that the model won’t deviate much between responses.